[Week 04 / Day 015] 학습 기록

[Week 04 / Day 015] 학습 기록

📝 오늘 한 일

- 강의 수강 및 퀴즈

- [DL Basic] 7, 8강 실습

- [DL Basic] RNN 퀴즈

- 기본 과제 완료

- 멘토링 (논문 스터디)

- 코딩테스트 문제 풀기

👥 피어세션 요약

키워드 공유

- attention

- transformer의 세 가지 multi-head attention

- LSTM의 장점

- Vanilla RNN

- backward pass에서 weight matrix W가 계속해서 곱해져서 vanishing & exploding gradients 문제가 발생할 수 있다.

- gradient clipping과 같은 방법으로 개선할 수 있으나, 한계가 있다.

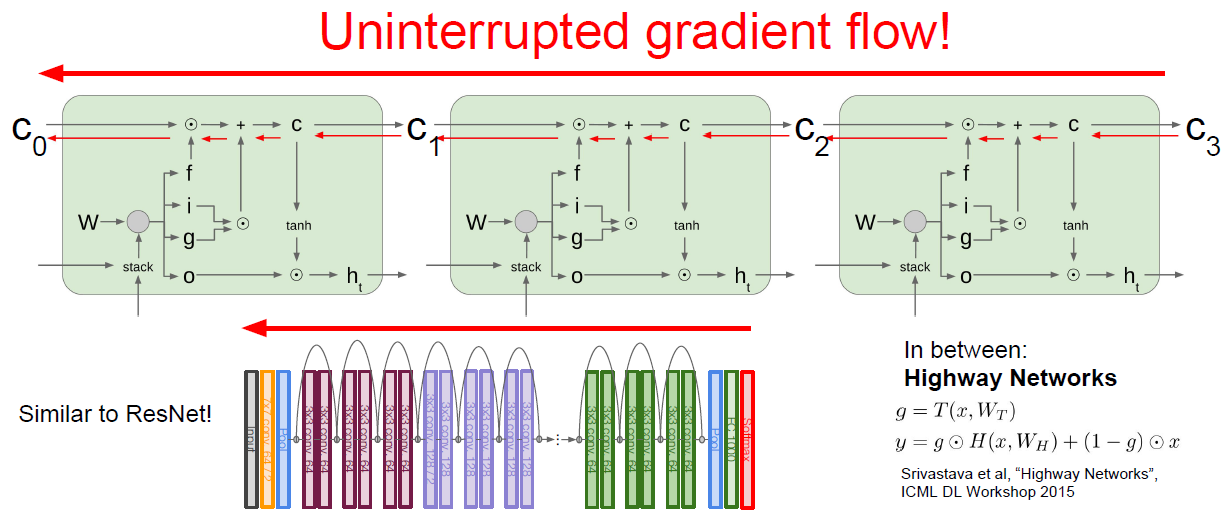

- LSTM

- cell state를 계산하는 식과 hidden state를 계산하는 식 모두 element-wise multiplication을 사용한다.

- cell state의 backprop이 그저

(upstream gradient) * (forget gate)가 된다.- 이렇게 곱해지는 forget gate는 매 step 마다 바뀌기 때문에, 동일한 weight matrix가 곱해져 문제가 발생했던 Vanilla RNN에 비해 낫다.

- 또한, forget gate는

Sigmoid값으로 element-wise multiplication이 0 or 1 값이기 때문에, 이를 반복적으로 곱했을 때 더 좋은 수치적 특성을 보일 수 있다.

tanh를 매 step마다 거치는 것이 아니라, 단 한 번만 거치면 된다.- Vanilla RNN의 backward pass에서는 매 step에서 gradient가

tanh를 거쳐야 했다.

- Vanilla RNN의 backward pass에서는 매 step에서 gradient가

gradient가 모델의 끝인 loss에서 cell state \(c_0\)까지 흘러가면서 방해를 덜 받는다.

⇒ ResNet의 skip connection == LSTM의 element-wise multiplicationbackprop through cell state는 gradient를 위한 고속도로

- Vanilla RNN

- (강의 추천) AutoEncoder의 모든것

코딩테스트 스터디

- [BOJ] 원숭이 매달기

🏫 멘토링 요약

수업 관련

- MHA의 각 attention head가 각기 다른 요소에 대해 attention을 갖는 원리가 무엇인지 궁금하다.

- 따로 원리가 있다기보다는, 실제로 해보니까 잘 된 것이라고 생각하면 된다.

- AI 분야에서는 이런 느낌인 게 은근히 많다.

- Positional encoding

- 이것 또한 큰 의미가 없다. 그냥 여러 position을 다르게 encoding 하기 위한 것이라고 생각하면 된다.

- 이것 자체도 학습이 가능하다. (trainable)

- transformer는 input 길이가 고정된 값이다. 대신 엄청 크다.

- ResNet에서 skip connection으로 residual만 학습시키는 것의 원리

- 연구자들이 ‘잘 될 것 같아서’ 시도해 본 것이 실제로 잘 된 케이스이다.

- 후속 논문들에서 왜 잘 되는지에 대한 연구가 이뤄지기도 한다.

- ResNet 계열의 모델은 범용성이 좋다.

- ResNet, ResNext, Res2Net, ResNest

- MixUp, CutMix 등의 data augmentation 방법론

- 각 방법이 효과적인 문제의 유형 정도만 알고 있어도 충분하다.

- SGD와 Adam

- SGD - 초기

lr값에 너무 민감하다.- 최적의

lr값을 찾는 것이 관건이다. - local minimum에 도달하더라도 flatter 한 것에 도달하게 된다. train과 dest 차이가 적으므로 test accr가 더 좋다.

- 최적의

- Adam -

lr을 덜 섬세하게 잡아도 웬만큼 good- adaptive ⇒ 수렴속도 faster

- 하지만 여전히 최적의

lr값을 찾는 것은 중요하다. (hyperparameter tuning)

- 두 방법마다 잘 되는 task가 다르다. 실제로는 여러

lr과 함께 Adam, SGD 등의 방법을 모두 조합해서 해본다. - 스케줄러도 매우 중요하다.

- 초반에는

lr을 크게 주어야 local minimum에 빠질 위험을 줄일 수 있다.

- 초반에는

- 경험적으로 해야 한다. (이미 잘 알려진 정보들을 이용할 줄 알아야 한다.)

- SGD - 초기

심화 과제 관련

transfer learning 심화 과제에서 이미지 셋을 쓴 경우와 안 쓴 경우의 차이가 컸는가?

⇒ 차이가 크지 않았다. (transfer learning의 효과가 크지 않았다.)

- transfer learning의 효과가 크지 않았던 이유

- 기존 학습된 데이터(source data)와 추가 데이터(target data)의 domain이 너무 달랐다.

- target data가 충분히 많았다.

- transfer learning이 효과적이려면?

- src data와 target data의 domain이 비슷해야 한다.

- target data가 적을수록 효과적이다.

- transfer learning에 대한 이야기

- weight의 관점에서 보면, training을 많이 시키면 시킬수록 원래 지식을 잃어버리게 된다.

- 언제 fine-tuning을 멈춰야 하는지, freezing이 중요하다.

- 현업에서는 모델을 scratch부터 만들기보다는, 오히려 transfer learning을 많이 사용한다.

- 경험적으로 접근하는 것이 좋은 것 같다.

- hyperparameter tuning 시, 아직은 Ray와 같은 툴보다는 사람이 더 나은 것 같다.

🐾 일일 회고

정신이 없는 하루였다. 강의에서도 정보가 쏟아지고, 피어세션에서도 정보가 쏟아지고, 멘토링에서도 정보가 쏟아지는 신기한 하루였기도 했다. 특히, 실습을 하면서 정말 많이 배운 것 같다. 아직은 dimension 계산이 익숙하지 않아 허둥대지만 차근히 한 줄씩 뜯어보려 한다. 그런데 시간이 있을까..? 🥲

🚀 내일 할 일

- 강의 수강

- [Data Viz] 5-1, 2, 3강

- 오피스아워

This post is licensed under CC BY 4.0 by the author.